I’ve often heard this from the people around me: “I wish I had a few more like myself or like my friend.” It expresses a dream many people share: creating a being that resembles what we want. With the development of artificial intelligence models and their diverse functions, we humans seem to have come very close to achieving this dream: the artificial human, that is, a humanoid robot with artificial intelligence. This article examines how we can build someone like ourselves with artificial intelligence. Of course, the artificial kind.

From natural man to artificial man

Researchers and specialists have focused on building humanoid robots for years. A primary goal has been to approach human-level performance and even surpass it. Companies like IBM have been striving to build robots for years. But what we’re talking about here are humanoid robots that behave exactly like humans. They talk, listen, analyze, laugh, cry, and so on. Of course, the artificial kind. What separates industrial robots from artificial humans, or humanoid robots, is what we know today as artificial intelligence. Artificial intelligence is closing this gap, and it is predicted that, as language models develop, most tasks performed by natural human intelligence will soon be doable by artificial intelligence.

Right now, in 2025, you can talk to an artificial intelligence. You write, and language models respond. You use your voice, and the AI talks to you like a human. You provide an image, and the AI analyzes it. It recognizes colors. It detects smells. With sentiment analysis, it can infer your emotions and respond to them appropriately. If we continue this way, it will become difficult to tell an AI from a human.

Now imagine this AI integrated with robotics. The result is the artificial human: a humanoid robot that performs most human activities and can act exactly like a human. In this article, we examine the current status of artificial humans and the status they will have in the near future. We do so technically and in detail to give you a clear picture of the future.

Language models are the first and most basic technology for producing artificial humans

The first and most basic building block for producing artificial humans is the language model, which many people already use today. As of 2025, according to a DataReportal report, more than 1 billion people worldwide use artificial intelligence and its various tools. Numerous language models have been released, some specialized for a single task and others built to handle many. Models such as Grok and GPT are trained as multi-taskers. Reading text, understanding speech, converting speech to text and text to speech, generating video from text, and going the other way by describing images and video in text are all tasks that AI models can perform. These are the same tasks that natural human intelligence performs. As you read this text, you are converting it into a perception in your mind. Existing AI models can also analyze your text and understand what you mean. You can recognize the sound of a car passing by your window. AI models specialized in sound analysis can recognize that sound too. You can recognize the colors around you, and AI models can do the same. These abilities keep growing stronger and more accurate, and it is predicted that in some cases artificial intelligence will outperform humans. For example, from the sound of a passing car you may only realize that a car is going by, while an AI can also identify the type of car. Now let’s put this ability into a robot: a robot that in some cases performs intelligence-related tasks better than humans. Now you have a robot that is intelligent. Even smarter than you!

Artificial skin

One topic raised in recent years regarding humanoid robots is artificial skin that can recognize touch and pressure just like natural human skin. One example is optical/electronic artificial skin, which has improved robots’ ability to sense their environment. This technology can sense touch, temperature, and pressure. By combining fiber optics, pressure sensors, and flexible materials, it enables robots to perceive and analyze their surroundings. So we are very close to developing artificial skin. Now imagine this skin added to robots. The robot can sense pressure, touch, the amount of light, and so on, which is what human skin does, and can use this to better evaluate and analyze events in its environment. With 3D printers, we can now create skin that looks exactly like your face. So, up to this point, we have artificial intelligence and artificial skin.

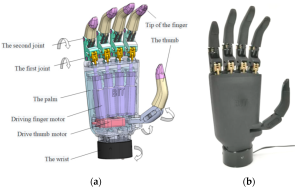

Artificial limbs

Let’s move on to artificial limbs. Now that we have a robot with artificial intelligence and artificial skin, we need artificial limbs to make its functioning more natural and complete. The first challenge in developing such robots was maintaining balance while walking and running. The Tesla Optimus robot currently shows good performance in this area. It can run, move around, and perform many tasks on two legs, as humans do. One technology in this area is the artificial leg developed at the University of Michigan, which maintains balance using IMU sensors and consumes less energy.
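To make the idea of balancing with IMU sensors more concrete, here is a minimal sketch of a complementary filter, a common textbook way to estimate how far a body is leaning by blending gyroscope and accelerometer readings. It is not the method used by the University of Michigan leg or by Optimus. The function name, the sample format, and the blending factor `alpha` are all assumptions chosen for illustration.

```python
import math


def complementary_filter(samples, dt, alpha=0.98):
    """Estimate pitch (radians) from (gyro_rate, accel_x, accel_z) samples.

    gyro_rate is the angular velocity in rad/s; accel_x and accel_z are
    accelerometer readings along the forward and vertical axes.
    All names and the value of alpha are illustrative assumptions.
    """
    pitch = 0.0
    history = []
    for gyro_rate, accel_x, accel_z in samples:
        # Gravity seen by the accelerometer gives an absolute but noisy tilt.
        accel_pitch = math.atan2(accel_x, accel_z)
        # Integrating the gyroscope gives a smooth tilt that drifts over time.
        gyro_pitch = pitch + gyro_rate * dt
        # Blend the two: mostly gyroscope, gently corrected by the accelerometer.
        pitch = alpha * gyro_pitch + (1 - alpha) * accel_pitch
        history.append(pitch)
    return history


# Hypothetical readings: a body leaning steadily at 0.1 rad and not rotating.
true_tilt = 0.1
samples = [(0.0, math.sin(true_tilt), math.cos(true_tilt))] * 500
estimates = complementary_filter(samples, dt=0.01)
print(f"estimated tilt after 500 samples: {estimates[-1]:.4f} rad")
```

A balance controller would then use an estimate like this to decide how to move the joints. That control step is outside the scope of this sketch.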

For artificial hands, efforts have focused on increasing manual dexterity and the sense of touch. One such technology is a Japanese bionic hand developed in 2025 by an Osaka-based company. These hands can reproduce human hand movements with very high precision. It is predicted that by 2030, with the help of machine learning, artificial hands will move more naturally and perform better than natural hands. Their ability to gather more detailed information from the environment and to interpret it more deeply would make them superior to human hands.

Another technological marvel of recent years is the artificial nose. By combining sensors with artificial intelligence models, these noses can detect scents with high sensitivity and identify various odors with high accuracy. They have been used on production lines. Korean researchers at the DGIST research institute have designed an artificial nose with strong recognition capability.

Artificial eyes and ears are also among the most important technologies developed in recent years. Many streaming AI models can now see their surroundings well through a camera. You can already try these capabilities with the models available in Google AI Studio. The AI can see and analyze the environment: if you hold a mobile phone in your hand, it can recognize it. Object recognition models and streaming AI models have made artificial eyes and ears possible. The models can now see and hear, and they keep becoming more accurate. Now we have an AI, an artificial skin, and a number of artificial organs that can perform human tasks, in some cases better than humans. The question that arises is: what about feelings? Can we also transfer feelings to AI?

Artificial emotions

A concern that artificial intelligence scientists often raise is that AI may not be able to understand emotions the way humans do. But in recent years, AI models have been introduced that can recognize emotional states from the way a text is written. These methods are known as sentiment analysis. Given a written text, existing sentiment analysis can determine whether it is, for example, a happy post or one that shows the author’s anger. This is a significant step forward in AI models for understanding emotions. Today’s models can infer a person’s emotion from the face, the voice, the text, or a combination of these, and they are being developed to high accuracy. Multi-modal fusion systems are one example, and they have been used in Tesla cars to detect driver fatigue while driving. It is predicted that these models will become faster and more accurate at recognizing emotions, so perhaps one day artificial intelligence will understand most human emotions and respond appropriately. We have moved from intelligence, skin, and artificial limbs to emotions, the soft side of artificial humans. Now we have a robot that has skin like ours, can talk and move like us, has organs like ours with similar or greater functionality, and can recognize emotions and generate appropriate responses. It seems we are very close to an artificial human.
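The two ideas in this section, reading emotion from text and combining several signals, can be sketched in a few lines. The example below is a deliberately simple illustration and not how production systems or any car maker’s software works. It uses a tiny hand-written word list instead of a trained model, and a weighted average ("late fusion") to combine per-modality scores. The word lists, weights, and score values are all made-up assumptions.

```python
# Hypothetical mini-lexicons; real systems learn these associations from data.
POSITIVE = {"happy", "great", "love", "wonderful", "glad"}
NEGATIVE = {"angry", "hate", "terrible", "furious", "sad"}


def classify_text(text):
    """Label a text as positive, negative, or neutral by counting cue words."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def late_fusion(modality_scores, weights):
    """Combine per-modality emotion probabilities with a weighted average."""
    total = sum(weights[m] for m in modality_scores)
    emotions = set().union(*(s.keys() for s in modality_scores.values()))
    fused = {
        e: sum(weights[m] * s.get(e, 0.0) for m, s in modality_scores.items()) / total
        for e in emotions
    }
    # Return the most likely emotion together with all fused scores.
    return max(fused, key=fused.get), fused


print(classify_text("I am so happy, this is wonderful!"))

# Made-up outputs of three separate (hypothetical) face, voice, and text models.
scores = {
    "face": {"happy": 0.2, "angry": 0.8},
    "voice": {"happy": 0.4, "angry": 0.6},
    "text": {"happy": 0.9, "angry": 0.1},
}
weights = {"face": 0.5, "voice": 0.3, "text": 0.2}
label, fused = late_fusion(scores, weights)
print(label, fused)
```

The fusion step shows why combining signals helps: in this invented example the text alone looks happy, but the face and voice outweigh it.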

Artificial fingerprint

A fingerprint is made of ridges, ridge endings, and branches that combine into a unique pattern. Deep learning models can analyze a fingerprint by evaluating these patterns, and the result can then be reproduced for robots by 3D printing it on an elastomeric polymer or as surface nanostructures. This is where things get a little scary. Suppose robots could learn your fingerprint and misuse your identity. For now this is only a possibility, but it is one that could become real. Now we have a humanoid robot with strong intelligence; the ability to see, hear, smell, and understand emotions; artificial limbs; skin like ours (made with 3D printers); and finally a fingerprint like ours. What else?
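The line endings and branches mentioned above (called ridge endings and bifurcations in fingerprint terminology) can be located with a classic, pre-deep-learning technique known as the crossing number. The sketch below is an illustration of that technique only, not the deep learning approach the text describes. It assumes the fingerprint has already been reduced to a one-pixel-wide black-and-white image, and the tiny image used here is invented.

```python
def crossing_number(img, r, c):
    """Half the number of 0/1 changes met when walking around pixel (r, c)."""
    # The 8 neighbours, visited in circular order around the pixel.
    ring = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]
    values = [img[r + dr][c + dc] for dr, dc in ring]
    return sum(abs(values[i] - values[(i + 1) % 8]) for i in range(8)) // 2


def find_minutiae(img):
    """Return (endings, branches) as lists of (row, col) in a thinned 0/1 image."""
    endings, branches = [], []
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            if img[r][c] != 1:
                continue  # only ridge pixels can be minutiae
            cn = crossing_number(img, r, c)
            if cn == 1:
                endings.append((r, c))   # the line stops here
            elif cn == 3:
                branches.append((r, c))  # the line splits in two here
    return endings, branches


# Hypothetical toy input: one line that splits into two, drawn one pixel wide.
rows = ["0000000", "0000010", "0000100", "0111000", "0000100", "0000010", "0000000"]
image = [[int(ch) for ch in row] for row in rows]
endings, branches = find_minutiae(image)
print("line endings:", endings)
print("branches:", branches)
```

On this toy image the sketch reports three line endings and one branch. A real system would first need to clean and thin a scanned fingerprint, which is a much harder step than the detection shown here.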

Faster and more comprehensive learning by artificial humans

Now that you are familiar with AI-powered humanoid robots and their components, the question arises: can we design these robots to be stronger than humans? In many cases, the answer is yes. AI robots can draw on a variety of language models connected to big data and can analyze it at high speed using huge infrastructure capacity, a task that many humans find difficult or tiring after a while. The machine only needs to be charged to keep working non-stop for days, weeks, or years. Reinforcement learning and its newer methods allow an AI to learn quickly and correct itself based on feedback. This can happen at high speed, and the result may even surpass humans in mental power.
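Learning and self-correcting from feedback can be illustrated with one of the simplest reinforcement learning setups, an epsilon-greedy "bandit". The sketch below is a toy under stated assumptions, not the training method of any real robot or language model. An agent repeatedly picks one of three actions, receives a reward, and nudges its estimate for that action toward the reward it received. The reward values, step count, and parameter names are invented for illustration.

```python
import random


def run_bandit(true_rewards, steps=2000, epsilon=0.1, alpha=0.5, seed=0):
    """Learn the value of each action from reward feedback alone."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(estimates))
        else:
            # Exploit: otherwise pick the action that currently looks best.
            action = max(range(len(estimates)), key=estimates.__getitem__)
        reward = true_rewards[action]  # feedback from the environment
        # Self-correction: move the estimate a step toward the observed reward.
        estimates[action] += alpha * (reward - estimates[action])
    return estimates


# Hypothetical environment: the third action is the most rewarding.
estimates = run_bandit([0.2, 0.5, 0.9])
best_action = max(range(len(estimates)), key=estimates.__getitem__)
print("learned estimates:", [round(q, 3) for q in estimates])
print("preferred action:", best_action)
```

The agent is never told which action is best. It discovers this from feedback, which is the core idea behind the faster, feedback-driven learning described above.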

Imitation of behavior, speech, activities, mindset, etc. by artificial intelligence

We have come to an interesting topic. Artificial intelligence can learn from data to perform a specific task effectively and with high accuracy. Suppose an AI examines your behavior, speech, and activities in video, audio, and image data and learns from them. The result? The AI can copy your behavior, speech, and activities exactly and act in a way that looks like you. What will happen then?

Predictions for the future of artificial humans

Now that we can create AI-powered humanoid robots that walk, see, learn, and move, that have a face and skin like ours, carry our fingerprints, speak in our tone, and so on, we can produce models that are like us or better than us and direct them to carry out activities outside the home and workplace. Imagine designing an AI-powered humanoid robot that looks exactly like you and can, based on the data it is connected to, attend meetings in your place, score high on college exams, do your banking, and drive for you. All of this has become possible or is becoming possible. Factories are being set up that rapidly produce new versions of these robots, much like cell phone models that are updated every year or two. In 10 years, we will be saying that the robot factory has released a new robot that is entirely our own and does all our work. This is no longer an impossible dream. We are very close. Very.

Dr. Mehran Shirzad

DIGINORON Manager

This is a good idea. Of course, it may be scary. But technology has both fear and benefit.