In the general sense, AI Stream refers to the continuous, real-time processing and analysis of data streams using artificial intelligence algorithms. This approach relies on architectures and tools that can receive and preprocess input data (such as live video, sensor signals, and banking transaction logs) as it arrives, and then run inference, or even incremental learning, with machine learning or deep learning models.

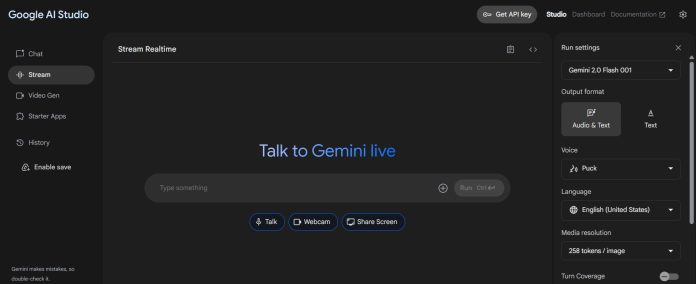

Put more simply, you can converse with an AI Stream system live, share video with it, and ask questions that it answers almost instantly. This capability could substantially change how today’s businesses operate. In this article, we explore the concept and its applications across various industries.

Importance and Key Functions of AI Stream

AI Stream has become a critical tool across many industries and sectors due to its ability to deliver real-time analysis and decision-making. In financial and banking systems, fraud detection occurs in fractions of a second, preventing massive losses. In video surveillance and urban security, live video processing helps identify and track individuals or suspicious events, enabling security forces to respond quickly. Likewise, in industrial IoT, sensor data is analyzed on the spot to detect any signs of equipment inefficiency or failure before a disaster occurs, allowing for timely predictive maintenance.

At the operational level, AI Stream relies on components for data ingestion, on-the-fly preprocessing, real-time inference by machine-learning models, and storage of results in specialized databases. This architecture is designed for high scalability and fault tolerance in distributed environments. Frameworks like Apache Flink and Kafka Streams handle stream processing and state management, while services such as TensorFlow Serving expose trained models for fast inference. Together, these components help the system keep latency low even as data volumes grow.

Technical Architecture of AI Stream at a Glance

In an “AI Stream” architecture, several key layers and components work together to carry data from the moment it is generated through to real-time inference:

Data Ingestion Layer

- Protocols & Tools: Typically uses Apache Kafka, Amazon Kinesis, or RabbitMQ to publish and consume continuous streams of messages.

- Load Balancing: Inputs are distributed across multiple clusters to prevent bottlenecks.

- Diverse Origins: CCTV cameras, IoT sensors, log servers, external APIs, and even compressed files.
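
The load-balancing idea above can be illustrated with key-based partitioning: each message carries a key (here a source ID), and a stable hash of that key decides which partition receives it, so messages from one source stay together and in order. This is a minimal sketch using only the Python standard library; `partition_for`, `distribute`, and the sample source names are hypothetical, and the MD5-based hash is a stand-in rather than the partitioner any particular broker uses.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a message key to a partition using a stable (process-independent) hash."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions


def distribute(messages, num_partitions):
    """Group (key, payload) messages by partition, preserving arrival order."""
    partitions = defaultdict(list)
    for key, payload in messages:
        partitions[partition_for(key, num_partitions)].append((key, payload))
    return dict(partitions)


# Hypothetical messages from two sources, interleaved as they arrive.
messages = [
    ("camera-1", "frame-a"),
    ("sensor-7", "21.5"),
    ("camera-1", "frame-b"),
    ("sensor-7", "21.7"),
]
assignment = distribute(messages, num_partitions=4)
```

Because the hash depends only on the key, every message from `camera-1` lands in the same partition regardless of which producer sent it.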

Pre-Processing / Edge Processing Layer

- Cleaning & Filtering: Removing initial noise—for example, empty frames or corrupted video data.

- Format Conversion: Encoding/decoding video (H.264 → raw frames), JSON → Avro/Protobuf for higher efficiency.

- Edge Computing: If latency or bandwidth is an issue, initial processing (e.g., simple motion detection) runs on edge devices, sending only essential data to the central system.
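
As a rough illustration of the cleaning and edge-side motion detection described above, the sketch below drops empty frames and forwards only frames that differ noticeably from the previous one. It assumes frames are small grayscale grids represented as lists of lists of integers; the function names and the threshold value are hypothetical, and a real deployment would more likely use an image library on decoded video.

```python
def is_empty(frame):
    """Treat a missing frame or an all-black frame as empty."""
    return not frame or all(pixel == 0 for row in frame for pixel in row)


def mean_abs_diff(prev, curr):
    """Average absolute per-pixel difference between two same-sized frames."""
    total = 0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += abs(p - c)
            count += 1
    return total / count if count else 0.0


def frames_to_forward(frames, threshold=10.0):
    """Yield (index, frame) for non-empty frames that show motion.

    The first non-empty frame is always forwarded as a baseline.
    """
    previous = None
    for index, frame in enumerate(frames):
        if is_empty(frame):
            continue
        if previous is None or mean_abs_diff(previous, frame) >= threshold:
            yield index, frame
        previous = frame


frames = [
    [[0, 0], [0, 0]],        # empty: dropped
    [[10, 10], [10, 10]],    # first valid frame: forwarded
    [[11, 10], [10, 12]],    # nearly identical: skipped
    [[200, 10], [10, 12]],   # large change: forwarded
    [],                      # corrupted/empty: dropped
]
forwarded = [index for index, _ in frames_to_forward(frames)]
print(forwarded)  # [1, 3]
```

Only two of the five frames leave the edge device here, which is the bandwidth saving the edge layer is meant to provide.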

Stream Processing Engine Layer

- Processing Engines: Apache Flink or Spark Structured Streaming, capable of stateful and windowed processing.

- State Management: Maintaining aggregate state—e.g., event counts over the past minute—using automatic checkpointing and durable state storage.

- Dynamic Scalability: Scaling nodes out or in based on input volume with minimal interruption.
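
To make windowed, stateful processing more concrete, here is a minimal single-process sketch of a tumbling-window event counter with a snapshot and restore step. It is only an illustration of the idea: the class name and methods are hypothetical, and engines such as Apache Flink implement checkpointing as coordinated distributed snapshots, which this sketch does not attempt to reproduce.

```python
import json
from collections import defaultdict


class TumblingWindowCounter:
    """Count events per key in fixed, non-overlapping time windows."""

    def __init__(self, window_seconds=60):
        self.window_seconds = window_seconds
        self.counts = defaultdict(int)  # (key, window_start) -> event count

    def add(self, key, timestamp):
        """Record one event and return the start of the window it fell into."""
        window_start = int(timestamp // self.window_seconds) * self.window_seconds
        self.counts[(key, window_start)] += 1
        return window_start

    def count(self, key, window_start):
        return self.counts.get((key, window_start), 0)

    def checkpoint(self):
        """Serialize the state so it can be written to durable storage."""
        entries = [[key, start, n] for (key, start), n in self.counts.items()]
        return json.dumps({"window_seconds": self.window_seconds, "counts": entries})

    @classmethod
    def restore(cls, snapshot):
        """Rebuild a counter from a snapshot produced by checkpoint()."""
        data = json.loads(snapshot)
        counter = cls(data["window_seconds"])
        for key, start, n in data["counts"]:
            counter.counts[(key, start)] = n
        return counter


counter = TumblingWindowCounter(window_seconds=60)
for ts in (5, 59, 60, 125):  # event times in seconds
    counter.add("camera-1", ts)

snapshot = counter.checkpoint()
recovered = TumblingWindowCounter.restore(snapshot)
```

After a simulated failure, `recovered` holds the same per-window counts as `counter`, which is the property checkpointing is meant to guarantee.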

Model Serving / Inference Layer

- Model Servers: TensorFlow Serving, TorchServe, or Kubernetes-based solutions (e.g., KServe, formerly KFServing).

- Batch vs. Online Inference: Batch inference scores accumulated data on a schedule; for streaming data, online inference scores each request as it arrives.

- Model Hot-Swap: Canary releases or blue-green deployments enable swapping in new models without downtime.
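
A canary release can be sketched as a router that sends a small, deterministic share of requests to the new model and the rest to the current one, then promotes the new model once it has proven itself. The example below is a simplified in-process illustration; `CanaryRouter`, `bucket`, and the toy models are hypothetical, and real model servers typically handle this at the traffic-routing level rather than in application code.

```python
import hashlib


def bucket(request_id: str) -> int:
    """Map a request ID to a stable bucket in the range 0..9999."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 10_000


class CanaryRouter:
    """Route a fixed share of requests to a canary model."""

    def __init__(self, stable_model, canary_model, canary_share=0.1):
        if not 0.0 <= canary_share <= 1.0:
            raise ValueError("canary_share must be between 0 and 1")
        self.stable_model = stable_model
        self.canary_model = canary_model
        self.canary_share = canary_share

    def predict(self, request_id, features):
        """Return (route_name, prediction) for one request."""
        use_canary = bucket(request_id) < self.canary_share * 10_000
        model = self.canary_model if use_canary else self.stable_model
        return ("canary" if use_canary else "stable"), model(features)

    def promote(self):
        """Make the canary the new stable model without stopping the router."""
        self.stable_model = self.canary_model
        self.canary_share = 0.0


# Toy models standing in for real inference calls.
router = CanaryRouter(
    stable_model=lambda features: "v1",
    canary_model=lambda features: "v2",
    canary_share=0.1,
)
route, prediction = router.predict("req-42", features=[0.3, 0.7])
```

Hashing the request ID keeps routing consistent, so a given request always reaches the same model version while the canary is being evaluated.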

Storage & Sink Layer

- Real-Time Databases: Cassandra or Elasticsearch for storing results and enabling fast queries.

- Data Lake: Storing raw data and processed results in S3 or ADLS for offline analysis.

- Dashboards & Alerts: Integrating with Grafana/Prometheus or Kibana to display KPIs and configure real-time alerts.
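
A real-time alert of the kind mentioned above often boils down to a rule evaluated over a rolling window of recent results. The sketch below shows one such rule: it fires when the average of the last few scores exceeds a threshold. The class name, window size, and threshold are hypothetical; in practice a rule like this would usually be configured in the monitoring tool rather than hand-written.

```python
from collections import deque


class RollingAlert:
    """Fire when the average of the most recent scores exceeds a threshold."""

    def __init__(self, window_size=5, threshold=0.8):
        self.values = deque(maxlen=window_size)  # oldest score is evicted automatically
        self.threshold = threshold

    def observe(self, score):
        """Record a score and return True if the alert should fire."""
        self.values.append(score)
        window_full = len(self.values) == self.values.maxlen
        average = sum(self.values) / len(self.values)
        return window_full and average > self.threshold


alert = RollingAlert(window_size=3, threshold=0.5)
fired = [alert.observe(score) for score in (0.9, 0.9, 0.9, 0.0, 0.0)]
print(fired)  # [False, False, True, True, False]
```

Waiting for a full window before firing avoids alerts triggered by a single early outlier.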

Orchestration & Monitoring Layer

- Containers & Kubernetes: All services run in Docker containers managed by Kubernetes.

- CI/CD for Code & Models: Tools like Jenkins or GitHub Actions automate deployment after every code or model update.

- Logs & Metrics Aggregation: Collecting logs (ELK Stack) and metrics (Prometheus) to track service health and response times.
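
Response-time tracking usually means summarizing latency samples as percentiles rather than averages, since a few slow requests can hide behind a healthy mean. The following sketch computes nearest-rank percentiles over a list of latencies; the function name and sample values are hypothetical, and a metrics system would normally compute these from histograms rather than raw samples.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("at least one sample is required")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical inference latencies in milliseconds.
latencies_ms = [120, 80, 100, 400, 90]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(p50, p95)  # 100 400
```

Here the median looks healthy at 100 ms while the 95th percentile exposes the 400 ms outlier, which is why tail percentiles are the more useful health signal.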

AI Stream and Future Businesses

One of the most exciting aspects of AI Stream is its impact on businesses. This section looks at how the technology could affect several industries.

AI Stream and Education

AI Stream and Home Repair Services

Many times, I’ve had a technical issue with a household appliance. When I contacted a repair technician, they often asked for photos or a description of the problem. Now imagine that AI could do this live: you point your smartphone camera at the fault and ask the AI to walk you through it. You could diagnose many simple defects yourself or, based on the AI’s analysis, describe the problem accurately and choose the right repair technician.

AI Stream and the Interior Design Industry

You have probably wanted to change some part of your home decor at some point. In these situations, you usually ask friends for advice. Now imagine pointing your smartphone camera at a corner of your home and asking AI for creative suggestions drawn from the millions of data points it has. After a few rounds of back-and-forth, you can gather opinions and develop new, attractive ideas. In my opinion, interior design and decoration is one of the industries that AI will affect most heavily.

AI Stream and the Cosmetics and Health Industry

Imagine pointing your smartphone camera at your face and asking AI to analyze your skin for imperfections or suggest makeup techniques suited to your face type. AI can make recommendations drawn from millions of recorded data points. My prediction is that many people will soon turn to AI for makeup advice, which would transform the beauty industry.

Other Functions of AI Stream

There are many more examples of this transformative technology, ranging from military applications, such as real-time target identification and adjustment to moving targets within seconds, to helping save patients in critical situations with instant AI-powered guidance. AI Stream can also offer real-time consultations in fields like education and law. This technology seems likely to reshape the future of AI. Imagine robots that take in their surroundings through 360-degree cameras, analyze them instantly, and respond appropriately. Now, think about how this technology could transform your business.

Dr. Mehran Shirzad

Diginoron Manager
