Reinforcement Pretraining (RPT): A New Revolution in Pretraining Large Language Models

Large language models (LLMs) such as GPT-4 and Claude, trained on vast amounts of text, can generate remarkably human-like responses. But can their predictive power be improved more effectively than with conventional pretraining? Researchers at Microsoft and Peking University answer yes, with a new approach called Reinforcement Pretraining (RPT).

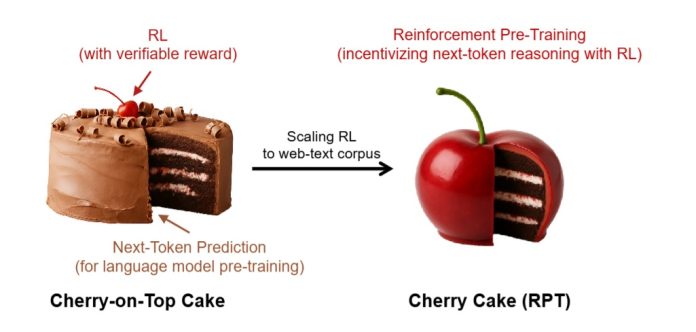

What is RPT? Reimagining Language Model Training

Conventionally, language models are pretrained simply to predict the next token (a word or symbol). RPT recasts this as a reasoning task: instead of guessing immediately, the model first “thinks,” forming and weighing hypotheses in a short reasoning trace, and only then commits to its next-token prediction.
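To make the reason-then-predict idea concrete, here is a minimal sketch of how such a step could be framed and parsed. The prompt wording, the `<answer>` tag, and the helper names are hypothetical choices for illustration, not the paper's actual format; the only idea taken from the text is that the model writes out its reasoning before committing to a next-token prediction.

```python
import re
from typing import Optional, Tuple

# Hypothetical prompt template: the model sees the text so far and is asked
# to reason before committing to a single next-token prediction.
PROMPT_TEMPLATE = (
    "Complete the text by predicting the next token.\n"
    "Think step by step, then give your prediction inside <answer></answer>.\n\n"
    "Text: {context}"
)

# Hypothetical answer format; non-greedy so each tag pair matches separately.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def build_prompt(context: str) -> str:
    """Wrap the preceding text in the (assumed) reasoning prompt."""
    return PROMPT_TEMPLATE.format(context=context)


def split_reasoning_and_prediction(output: str) -> Tuple[str, Optional[str]]:
    """Separate the model's free-form reasoning from its final prediction.

    Returns (reasoning, prediction). The prediction is None when no answer
    tag was produced; a trainer would typically score that as incorrect.
    The prediction is not stripped, because a leading space can be part of
    the token.
    """
    matches = list(ANSWER_RE.finditer(output))
    if not matches:
        return output.strip(), None
    last = matches[-1]  # if several answers appear, keep the final one
    return output[: last.start()].strip(), last.group(1)
```

For instance, calling `split_reasoning_and_prediction` on `"The list names animals, so a noun fits.<answer> cat</answer>"` returns the reasoning sentence and the prediction `" cat"`, leading space included.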

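The model is trained with reinforcement learning, with one major shift: the rewards come from the text data itself rather than from human feedback or another model. A prediction is rewarded when it matches the token that actually follows in the corpus, so the correct answer is always available and can be checked automatically.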
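Because the target is simply the token that really follows in the corpus, training problems can be cut directly from unlabeled text. The sketch below assumes a toy space-based tokenizer (a real system would use the model's own subword tokenizer) and a hypothetical minimum context length; it only shows how each position yields a (context, ground truth) pair without any human labeling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NextTokenProblem:
    context: str       # text the model conditions on
    ground_truth: str  # the token that actually follows in the corpus


def toy_tokenize(text: str) -> List[str]:
    """Toy tokenizer (an assumption): split on spaces, keeping the leading
    space attached to each word the way many subword tokenizers do."""
    words = text.split(" ")
    return [words[0]] + [" " + w for w in words[1:]]


def make_problems(text: str, min_context: int = 2) -> List[NextTokenProblem]:
    """Turn raw text into next-token prediction problems.

    Every position after the first `min_context` tokens becomes one problem;
    the answer key comes from the text itself, so no annotation is needed.
    """
    tokens = toy_tokenize(text)
    problems = []
    for t in range(min_context, len(tokens)):
        problems.append(
            NextTokenProblem(context="".join(tokens[:t]), ground_truth=tokens[t])
        )
    return problems
```

With the sentence `"the cat sat on the mat"`, this produces four problems, the first asking for `" sat"` after `"the cat"` and the last asking for `" mat"` after `"the cat sat on the"`.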

Why is RPT important?

- Precise, hard-to-game rewards: a prediction is rewarded only if it exactly matches the actual next token(s) in the text; predictions that are merely close earn nothing. Because the reward is a simple rule checked against the ground truth rather than a score from a learned reward model, there is less room for reward hacking, where a model exploits quirks of the reward signal instead of genuinely improving.
- High scalability: RPT needs no human annotation. It uses the same unlabeled text as standard pretraining (e.g., books or Wikipedia) and turns each next-token prediction into a reasoning problem, which improves what the model learns from that data.
- Improved prediction performance: in the reported tests, the RPT-14B model can outperform larger models such as R1-Qwen-32B.
- Stronger zero-shot performance: the model can handle many different tasks without task-specific examples or fine-tuning.
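The strict reward described in the first point can be sketched as a binary check. This version assumes a prediction is accepted only if it exactly reproduces one or more whole ground-truth tokens (a prefix that ends on a token boundary); anything else, including a near miss, scores zero. The function name and the list-of-strings token representation are assumptions for illustration, not the paper's code.

```python
from typing import List


def exact_match_reward(prediction: str, gt_tokens: List[str]) -> float:
    """Binary reward: 1.0 if `prediction` equals the concatenation of the
    first k ground-truth tokens for some k >= 1, otherwise 0.0.

    Near misses (a partial token, a synonym, a typo) all earn 0.0, so there
    is no graded similarity score for the policy to exploit.
    """
    prefix = ""
    for token in gt_tokens:
        prefix += token
        if prediction == prefix:
            return 1.0
        # Prefixes only get longer, so stop once the prediction can't match.
        if len(prefix) >= len(prediction):
            break
    return 0.0
```

For example, with ground-truth tokens `[" cat", " sat"]`, the predictions `" cat"` and `" cat sat"` both earn 1.0, while `" ca"` or `" dog"` earn 0.0. The design choice is deliberate: an all-or-nothing signal gives the model no partial credit to chase.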

Key Experiments

The researchers used mathematical text from the OmniMATH dataset, along with challenging benchmarks such as MMLU-Pro and SuperGPQA, to test the RPT method. The results were strong:

- On the hardest tokens to predict, next-token prediction accuracy was up to 3% higher than that of the baseline models.
- In zero-shot tests, the RPT model even outperformed the larger 32B model by up to 22%.
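“Hard data” here means tokens that are difficult to predict. One common way to quantify this (an assumption in this sketch, not necessarily the paper's exact procedure) is the entropy of a reference model's next-token distribution: the more spread out the probabilities, the harder the token. The thresholds and function names below are hypothetical; the sketch buckets tokens by entropy and reports accuracy per bucket.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def difficulty(probs: Sequence[float], easy_max: float = 0.5,
               medium_max: float = 1.0) -> str:
    """Bucket a token by how uncertain a reference model was about it.

    The thresholds are hypothetical placeholders, not values from the paper.
    """
    h = entropy(probs)
    if h <= easy_max:
        return "easy"
    if h <= medium_max:
        return "medium"
    return "hard"


def accuracy_by_difficulty(
    results: List[Tuple[Sequence[float], bool]]
) -> Dict[str, float]:
    """Next-token accuracy per difficulty bucket.

    `results` pairs a reference model's distribution for each token with
    whether the evaluated model predicted that token correctly.
    """
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for probs, is_correct in results:
        bucket = difficulty(probs)
        totals[bucket] += 1
        correct[bucket] += int(is_correct)
    return {b: correct[b] / totals[b] for b in totals}
```

A uniform distribution over four candidates has entropy ln 4 ≈ 1.39 nats and lands in the "hard" bucket under these thresholds, while a reference model that is fully certain (probability 1.0) yields entropy 0 and an "easy" token.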

How RPT Thinking Differs from Traditional Methods

One of the most interesting parts of the paper is its analysis of how the RPT model “thinks.” The model does not only formulate hypotheses; its reasoning traces also show patterns of “reflection,” “logical inference,” and “divergent thinking” (considering several possible continuations before choosing one).

The Future of RPT: Just the Beginning

Although initial experiments were successful, the authors note that there is still much room for improvement in RPT:

- Inclusion of more general text data beyond just mathematical content
- Experiments with larger model sizes
- Integration of RPT with “compositional reasoning” methods

In conclusion, RPT marks a revolution in how language models are trained. By introducing step-by-step reasoning already at the pretraining stage, it pushes models to understand text more deeply rather than merely reproduce it more effectively. If you follow the future of AI, RPT is worth watching closely.

Source: Original paper published on arXiv with ID 2506.08007.